About the Project

The objective of the project is to generate 3D avatars with speech-driven gestures and to visualize the results in the dome at Visualiseringscenter C in Norrköping. The project pipeline consists of four subsystems:

- Text Generation using GPT-SW3

- Text-to-Speech (TTS)

- Gesture Generation

- Gesture Visualization in Unreal Engine

During the WASP Summer School 2022, students worked in groups to develop creative fictional storylines using language models and prompt engineering. The stories were converted into audio (WAV files) and gestures (BVH files) using recent TTS and gesture-generation models. Finally, the audio and gestures were imported into Unreal Engine and, using Sequencer, assigned to a sequence in which each character in a story is played by a different avatar.

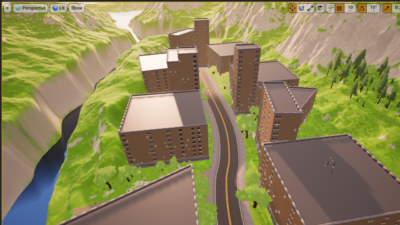

Scene Description

- A 3D scene of an urban landscape surrounded by mountains and a river

- Avatars created for the stories


Link to the project presentation: Presentation

Link to the project repository: GitHub link

Project Results

After receiving the participants' submissions during the summer school, we rendered their stories from Unreal Engine into videos.